Ollama: Your Private AI

Are you tired of limited tokens and cloud-based AI models? Well, let me introduce Ollama!

What is Ollama?

Ollama is a tool that lets you run a wide variety of open-source large language models (LLMs) directly on your local machine, with no subscription and no internet access required (except for downloading the tool and the models, of course! :D).

For now, it is only compatible with Linux and macOS; Windows support is on the way, with a "Preview" version. Here is the website link if you are curious: https://ollama.com/download

Oh! If you really want to install it (kind of) on your Windows machine, I recommend Network Chuck's video, where he installs Ollama using WSL!

Before doing anything, it's important to note that depending on the machine you run your models on, the waiting time can be long. On my side, I'm using a MacBook Air M2 with 8 GB of RAM (don't judge me here), and a simple question can take 15 to 20 seconds to answer. Asking for a quote from The Office takes around 30-40 seconds.

System requirements for running models: a minimum of 8 GB of RAM is needed for 3B-parameter models, 16 GB for 7B models, and 32 GB for 13B models.
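As a quick sanity check before downloading anything, the rule of thumb above can be written as a small lookup. This is only a sketch: the table simply restates the figures quoted in this post, and the names `RAM_GUIDELINES_GB` and `min_ram_gb` are made up for illustration, not part of Ollama.

```python
# Rule-of-thumb table restating the figures quoted in this post (not an Ollama API).
# Each entry is (model size in billions of parameters, suggested minimum RAM in GB).
RAM_GUIDELINES_GB = [(3, 8), (7, 16), (13, 32)]


def min_ram_gb(params_billion):
    """Return the suggested minimum RAM in GB, or None if the model is larger than the table covers."""
    for max_params, ram in RAM_GUIDELINES_GB:
        if params_billion <= max_params:
            return ram
    return None


for size in (3, 7, 13):
    print(f"{size}B model -> at least {min_ram_gb(size)} GB of RAM")
```

Treat the result as a guideline rather than a hard limit; smaller machines may still work, just slowly.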

REST API

Once Ollama is installed on your local machine, you'll notice that it listens on port 11434 on localhost. You can talk to it directly through this port using the curl command if you'd like. Here's an example:

curl -X POST http://localhost:11434/api/generate -d '{ "model": "llama2", "prompt":"Give me a quote from The Office" }'

It can be an interesting way to set up a local server on your home network and then access it from another machine. You can open that port to the internet (or via a VPN), and now you have your own ChatGPT accessible from anywhere!
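If you would rather call the API from a script than from curl, here is a minimal Python sketch that uses only the standard library. It assumes the server streams its answer as one JSON object per line, each carrying a "response" fragment and a "done" flag; the helper names (`build_payload`, `join_stream`, `generate`) are my own and not part of Ollama.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # same endpoint as the curl example


def build_payload(model, prompt):
    """Encode the same JSON body that the curl example sends."""
    return json.dumps({"model": model, "prompt": prompt}).encode("utf-8")


def join_stream(lines):
    """Concatenate the "response" fragments of a streamed reply.

    Assumption: every non-empty line is a JSON object with a "response"
    string and a "done" boolean.
    """
    parts = []
    for raw in lines:
        line = raw.decode("utf-8") if isinstance(raw, bytes) else raw
        line = line.strip()
        if not line:
            continue
        chunk = json.loads(line)
        parts.append(chunk.get("response", ""))
        if chunk.get("done"):
            break
    return "".join(parts)


def generate(model, prompt, url=OLLAMA_URL):
    """POST the prompt to a running Ollama server and return the full answer."""
    request = urllib.request.Request(
        url,
        data=build_payload(model, prompt),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        # The HTTP response object can be iterated line by line.
        return join_stream(response)


# Example (needs a running Ollama server with llama2 installed):
# print(generate("llama2", "Give me a quote from The Office"))
```

Keeping the parsing in `join_stream` separate from the network call makes it easy to try the logic on a few sample lines without a server running.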

Where to find my models

Ollama provides many different models that you can check on their website. They offer specialized coding models, medical models, uncensored ones, and more. It's up to you to choose which one suits your needs. Keep in mind that all the models are open-source and regularly updated by the community. New models can also emerge from time to time!

How to install

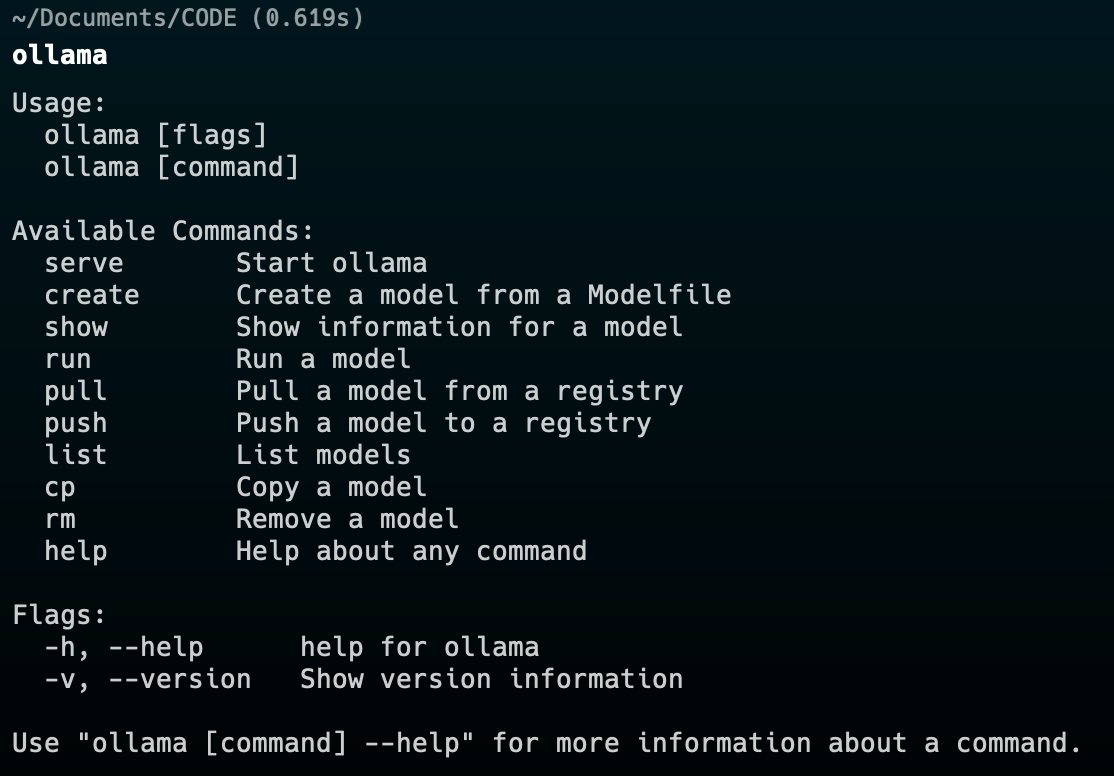

The installation process is quite simple. All you need to do is follow the instructions on the website and download the application. Once the application is installed, you can open a terminal and type the following command:

ollama
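If the command is not recognised, the usual cause is that the executable is not on your PATH yet. A small sketch to check this from Python (the helper name `find_cli` is made up for illustration):

```python
import shutil


def find_cli(name="ollama"):
    """Return the full path of the executable if it is on PATH, otherwise None."""
    return shutil.which(name)


path = find_cli()
print(f"ollama found at {path}" if path else "ollama is not on PATH yet")
```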

Install a model

If you want to install your first model, I recommend picking llama2 and trying the following command:

ollama run llama2

Once the model is downloaded (around 4 GB), you can run the same command again to start chatting with it. And remember, you don't need internet access to use that AI!

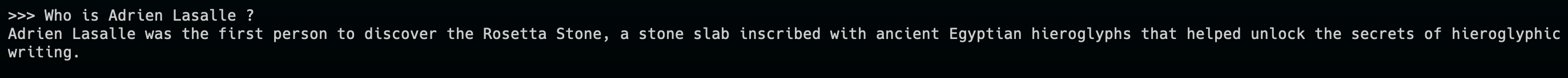

Obviously, as with most LLMs, double-check the information it gives you! :D If you have any doubts, it's always a good idea to verify the information against multiple sources.

Delete a model

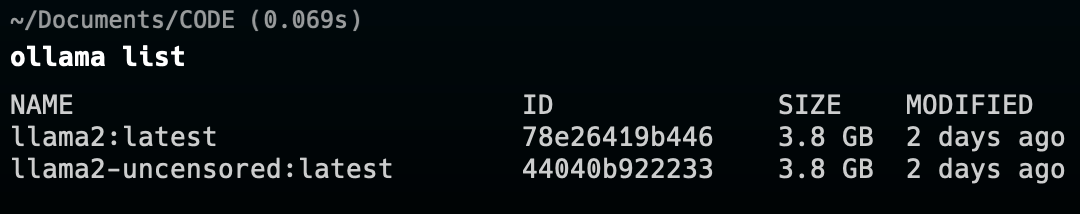

Let's say you run out of storage space (it's a MacBook user issue here...). You'll probably need to delete the models you downloaded from your local machine. Here is the command to list all your models:

ollama list

Then delete them using this command:

ollama rm <MODEL>
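If you have many models to clean up, the two commands can be chained from a script. The sketch below assumes that `ollama list` prints a header row followed by one row per model, with the model name in the first column; the function names are my own.

```python
import subprocess


def parse_model_names(list_output):
    """Extract model names from the text printed by `ollama list`.

    Assumption: the first non-empty line is a header, and every following
    row starts with the model name.
    """
    rows = [line.split() for line in list_output.splitlines() if line.strip()]
    return [row[0] for row in rows[1:]]


def installed_models():
    """Run `ollama list` and return the names of the installed models."""
    result = subprocess.run(
        ["ollama", "list"], capture_output=True, text=True, check=True
    )
    return parse_model_names(result.stdout)


def remove_model(name):
    """Run `ollama rm <MODEL>` for a single model."""
    subprocess.run(["ollama", "rm", name], check=True)


# Example (deletes every installed model, so use with care):
# for name in installed_models():
#     remove_model(name)
```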

Extra - macOS Shortcut

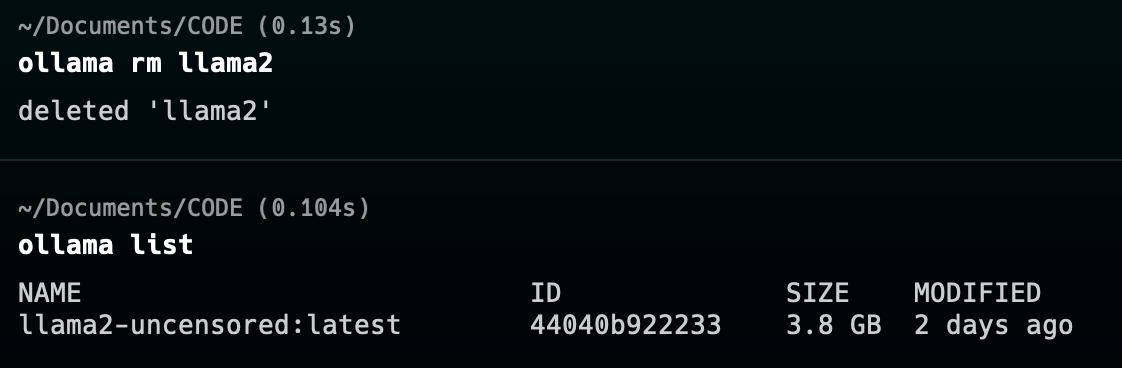

Since I am an Apple user, I know a black terminal window can hurt the sensibilities of my fellow Apple comrades. So I built a simple, working Apple Shortcut, so you don't have to open a terminal every time you want to use Ollama.

By the way, I am not a Shortcuts expert, so do not hesitate to share if you have a better one in your pocket; I would love to use it! :D

This shortcut will ask you for a prompt, then show you the result in another alert box.

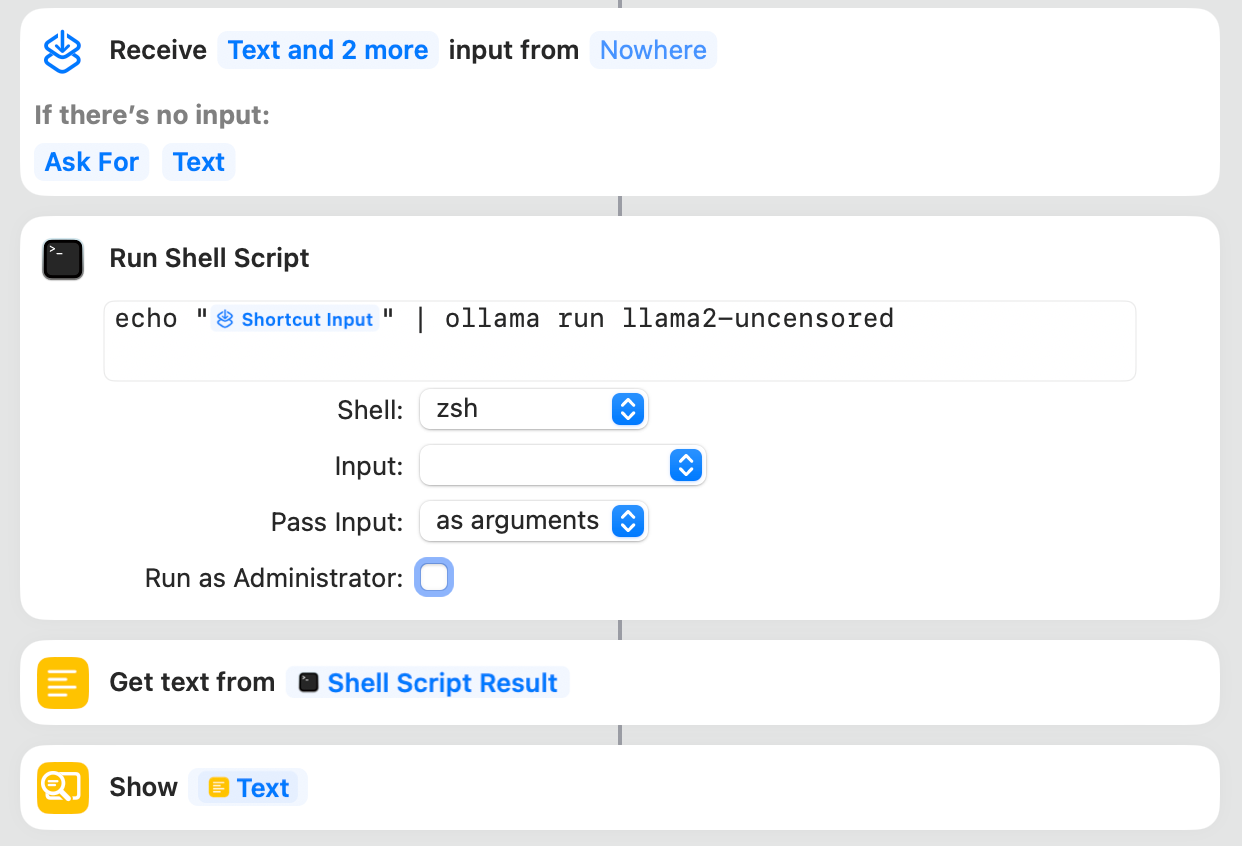

If you need to switch models frequently, I can share another shortcut with you. This time, it includes a list of models that you may need to edit to match the ones you have installed.

It works like the other one, but this time you choose which model to use when prompted. Quite fancy, isn't it?
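For readers who are not on macOS, roughly the same flow (pick a model, type a prompt, read the answer) can be sketched as a small Python script. This is not the Shortcut itself, just an approximation of the idea: the `MODELS` list is hypothetical and needs editing, and the script relies on `ollama run` accepting the prompt as an extra argument.

```python
import subprocess

MODELS = ["llama2"]  # hypothetical list; edit it to match the models you installed


def pick_model(choice, models=MODELS):
    """Map a 1-based menu choice (typed as text) to a model name.

    Falls back to the first model when the input is not a valid number.
    """
    try:
        index = int(choice) - 1
    except ValueError:
        return models[0]
    return models[index] if 0 <= index < len(models) else models[0]


def build_command(model, prompt):
    """Build the command line for a one-shot question."""
    return ["ollama", "run", model, prompt]


def ask(model, prompt):
    """Run the model once and return its answer as text."""
    result = subprocess.run(
        build_command(model, prompt), capture_output=True, text=True, check=True
    )
    return result.stdout.strip()


def main():
    """Show the model menu, read a prompt, and print the answer."""
    for number, name in enumerate(MODELS, start=1):
        print(f"{number}. {name}")
    model = pick_model(input("Model number: "))
    print(ask(model, input("Prompt: ")))


# Call main() from a terminal session to try it interactively.
```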

Extra - WebUI

If you want something spicy, here is a Web UI for a more ChatGPT-like experience, where you can upload images, manage your sessions, keep a history... well, like the real ChatGPT website, actually!

You can check the GitHub documentation for a simple install using Docker:

https://github.com/open-webui/open-webui/tree/main

On my side, I'm going to try that one very soon and will probably update this post or write a new one about installing the Web UI. Stay tuned!